on algorithm efficiency + the wisdom behind algorithm analysis

why did I have to learn this? why do coding interviews ask about this?

Join me as I unpack the basics algorithm analysis!

It’s officially my first ever publication here, very excited to launch this momentous occasion into the vacuum of the internet and mark it in my bullet journal.

My plan for this newsletter is to, in the words of Paul Millerd, “Write most days, publish most weeks.” I’ll cover a main topic I’ve learned/revised this week in the spirit of the Feynman Technique, sprinkle in some life/project updates, and share interesting tech tidbits from articles I’ve read, events I’ve attended, and share advice from one student to another.

To start this off, I’ll travel back in time to Fall 2023. It was my first ever in person computer science college class and I was excited to get coding. But the first thing we went over was….algorithm analysis. I’m not one to dismiss lessons so I did my best to take algorithm analysis seriously, but if I’m being honest, once the week was up and the lesson covered, I promptly forgot about it. As I embark on a journey of taking CS and Math fundamentals more seriously to become a great Software Engineer, I’ve officially realized why my professor bothered to teach us algorithm analysis in the first place.

In today’s newletter, I’ll go over:

why you should even care about algorithm analysis (with wisdom from the CEO of CodeSignal)

what someone mean’s when they ask you for the complexity of your solution

the coveted O(n) and O(logn)

that and more below ⬇️

☾algorithms☽

I’d already been sitting on this issue of the newsletter for a couple days as I logged onto a Rewriting the Code webinar about CodeSignal Assessments and Imposter Syndrome. It had been a moment since I’d signed up, so I was very excited to remember that THE CEO of CodeSignal, Tigran Sloyan, would be there. He answered many questions and shared his perspective on several things (more on that in my next post). However, when he started talking about solution optimization and complexity, I perked up so fast and took notes because I knew I had to include it in this newsletter. So…

algorithms-why do they matter?

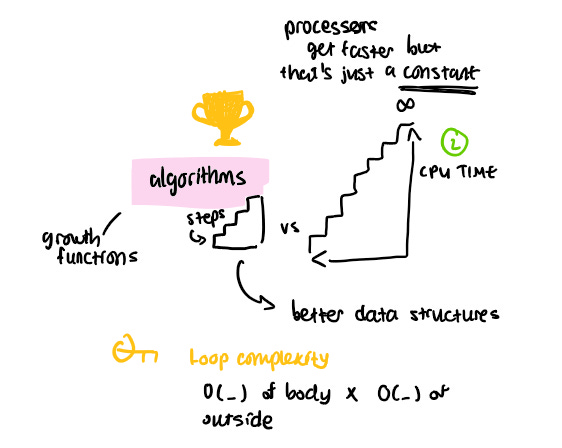

You might think that with how fast processors are getting, algorithm efficiency might matter less and less. The truth is, processors are only one small constant in the grand scheme of computer programming. To summarize Sloyan’s words: algorithm efficiency and solution optimization aren’t irrelevant. Computer scientists for decades have dedicated large chunks of time to optimization and are continuing to do so today. Although our computers might get faster, our data only gets larger.

What matters more than your processor or specs is the algorithms used and how many iterations we force the computer into. It’s like the difference between handing someone a 200pg handbook and a 10page summary. When we implement algorithms or solutions to problems, we ask the computer to use up time and memory. Making trade-offs between the two and finding efficient solutions is why algorithm analysis is so important.

algorithm analysis-what?how?

Algorithm analysis matters especially when using algorithms on a large scale. If, for example, I took 5 minutes to solve a math problem and Cindy took 7, the difference of time after solving one problem wouldn’t be too awful. But if we each had to solve 1000 problems, I would take 5000 minutes and Cindy would take 7000. The difference becomes thousands of minutes! See why analyzing our algorithms matters? Especially if you’re gearing up to start looking for internships or junior level roles in fields like software engineering, you can see one reason companies put so much emphasis on the complexity of your solution during code interviews. Algorithm analysis is all about determining how efficiently your code runs and how you can make it better.

There are two main components to algorithm analysis that I’ll cover: growth functions and Big O notation.

growth functions

Growth functions are made of variables and constants that show the relationship between the size n and the value you want to optimize, the value being t for time complexity or s for space complexity.

Essentially they’re just functions or equations you build by determining each line of code’s complexity (e.g how many times it runs) and then combining like terms. Basic algebra and logic are key here.

take this piece of code in Python for example:

print('Hello world')the print statement runs once, therefore the growth function comes out to be 1

What about this piece of code written in Javascript? (assuming n is some constant)

for(let i = 0; i < n; i++){

print(i);

}The for statement iterates the variable i from 0 to n - 1, running the print statements n times. So if the for loop runs n times and therefore the print statement runs n times, that should come out to be something like this, right?

n (for loop) + n (print statement) → n + n = 2n

Be careful! Remember that loops, for loops included, have a continuation condition which has to evaluated to determine whether or not to continue. Therefore, although the print statement runs n times, the for loop actually runs n + 1 times:

n + n + 1 → 2n + 1

big O notation -

Growth functions are all well and good (and might be on your midterm) but oftentimes, people tend to be more concerned with something called Big O notation also known as the order of growth.

Let’s go back to my growth function for my for loop

2n + 1

When n goes to infinity, the term 2n has the most impact on the behavior of my function. Therefore, I would say that the order of growth is 2n or more generally n, commonly written as O(n)

In summary, the big O notation is determined by highest power/leading term from your growth function. You don’t necessarily need the entire growth function, you just need to keep an eye out for the largest complexity. Big O notation is also usually what people mean when they ask you to describe the complexity of your solution.

common complexities

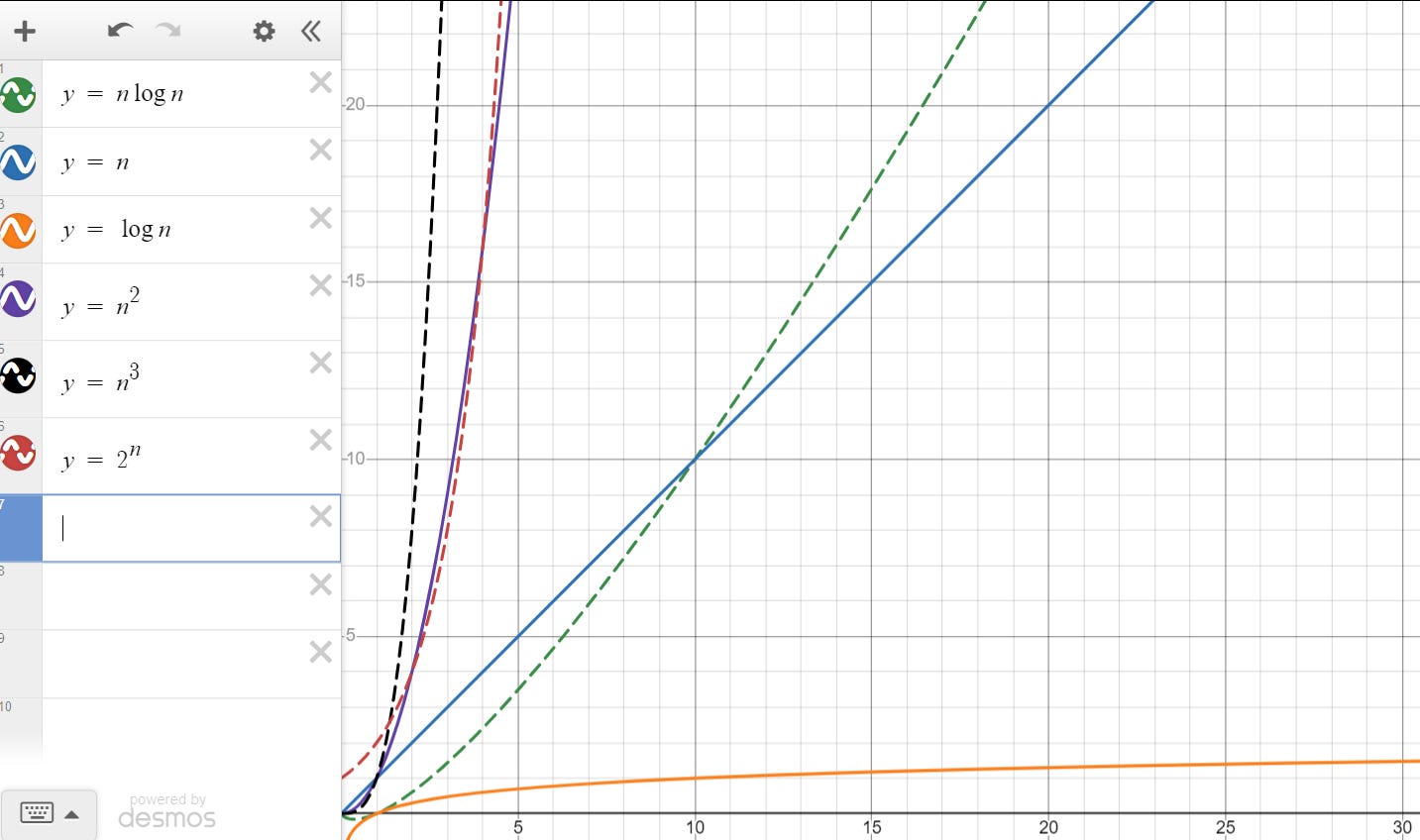

small scale:

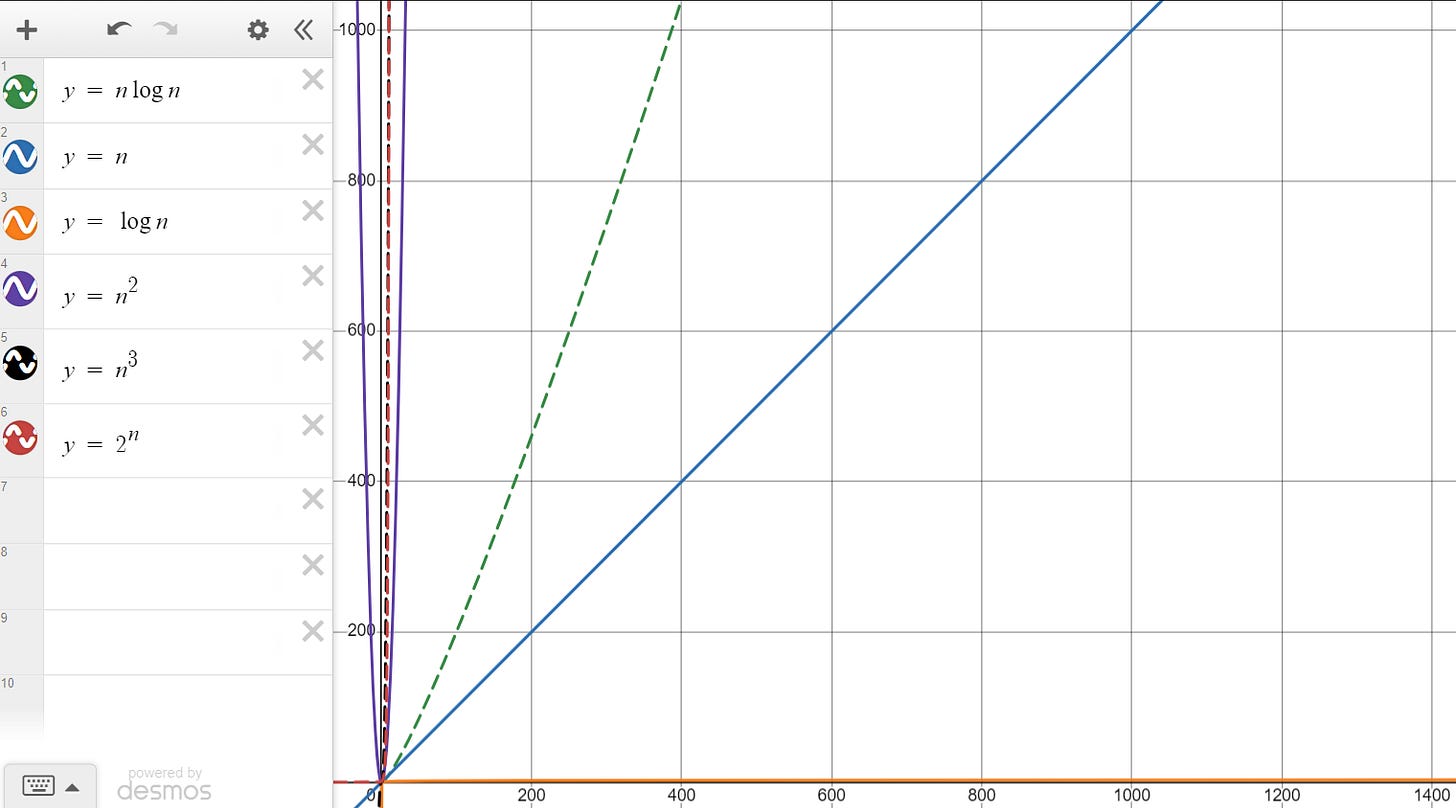

large scale:

Just by glancing at these two charts, it’s clear that some functions aka complexities you really want to stay clear of 👀

It’s also clear that of the common complexities, 2 stand out as being more efficient:

O(n) and O(logn)

O(n)

Essentially, O(n) occurs when the algorithm size/time is linearly proportional to the input n, therefore creating a straight line in the graphs above. It’s nice and reliable, without using up ungodly amounts of space and time.

Of course, there isn’t one set way to achieve O(n). However, we can see a really simple example if we go back to our example from earlier:

for(let i = 0; i < n; i++){

console.log(i);

}

You’ll see that this basic for loop/loop structure is a simple example of an algorithm that grows proportionally to its given input.

But what if…I put a loop…inside the loop…?

nested loops

let’s take a look at this code (// indicates comments, I commented line numbers for clarity):

for(let i = 0; i< n; i++){ //1

for(let j = 0; j < i; j++){ //2

console.log(j); //3

}

}

From our last example, we know that with for loops where i is incremented up til some value n, these loops are iterated n+1 times. The complexity of statements within the formulated are calculated in this way:

n iterations x O(1) statement = statement that is run n amount of times

If we replace the O(1) statement in this equation with # of iterations created by the nested for loop, the equation now looks something like this:

n iterations x (n + 1) iterations = a loop that is run n by n times, aka n^2! Similar logic can be applied to the statement on line 3 to determine that line 3 runs n times, giving us the growth function:

which can be simplifed to:

Since the leading term is now n^2, the complexity is O(n^2).

O(logn)

So far, we’ve been incrementing our for loops by 1, but what if instead we updated our counter variable by division or multiplication?

For example:

for (let count = 1; count < n; count = count * 2)

{

console.log(count);

}

for (let count2 = n; count2 > 0; count = count / 2){

console.log(count2);

}

In this case, the complexity of either case would be

or more generally

other reading

for another awesome explanation, see section 1.2.3 in Structure and Interpretation of Computer Programs, available online

Also check out this video by Abdul Bari, a CS legend:

I always love a good GeeksForGeeks summary. This one’s awesome because it also touches briefly on the complexity of recursion and how to account for complexity when calling functions: https://www.geeksforgeeks.org/how-to-analyse-loops-for-complexity-analysis-of-algorithms/#

sneak peek: how to prep for coding interviews and coding assessments

All this talk about algorithm complexity leads me back to the coding interview. Recently, I’ve been collecting free online resources and working on addressing my weaknesses so I can become a great sofware engineer. As a rising sophomore, my main goal for the upcoming year is to put myself out there and really cement my fundamentals. Some resources are found helpful in my journey so far are:

Zero to offer - A guide by students at Pitt CS : https://pittcs.wiki/zero-to-offer/

Teach Yourself Computer Science: a curriculum made of free resources for those looking to truly understand computer science

For a more in-depth advice on how to get good at Computer Science and how to start preparing for coding interviews, stayed tuned for my next post!

✧project / course updates✧

In other news, I’ve also been working on my front end engineering skills. I focus mainly on the courses provided for free by The Odin Project and am proud to announce I’m officially nearing the end of the Foundations course that covers fundamental HTML, CSS, and JavaScript. My absolute favorite part of the course would have to be learning how to start making use of the command line and using git (also because I feel like an old-time hacker 😎)

A project I’m really proud of at the moment - Learning Malay

Learning Malay is a site I was inspired to make after struggling for a long time to find and keep track of quality resources for learning the Malay Language. The current MVP is still a work in progress but currently has a working home page and research on how to learn ANY language effectively! My next to-dos are to add to my growing library of resources and publish a detailed about page about the language and my own language learning story.

Besides my web dev adventures, I’ve also been reviewing data structures using my old coursework and working through Structure and Interpretation of Computer Programs

my top 3 ✿✿✿

My top 3 moments from this past week-ish

Crocheting decorations for my school bag

my dad gifting me a very cool vintage lamp he found in our basement

posting this!

Thank you so much everyone who made it this far! If you have any feedback at all, please feel free to reply to this email or comment 🫶🏼